Building an Advanced Alarm System with AI Facial Recognition

A guide for setting up a security system with Home Assistant using a Zigbee mesh network and DeepStack AI facial recognition without the cloud.

Home automation and physical security are hobbies I'm passionate about: I have over 200 IoT devices and sensors installed and hidden around my house. In this post, I’ll share how I set up a comprehensive security system using Home Assistant, Alarmo, Zigbee sensors, Frigate, Double-Take, and DeepStack.

End Goal

The aim is to create a security system that integrates seamlessly into daily life without requiring compromises or becoming obtrusive, all while running locally on your network.

I won't share all my configurations to maintain the security of my setup, but I will provide enough information to help you build a similar system based on your needs.

Overview of the System

Logic and Automation:

Automation will bring all components together to work as a cohesive system. This is how it works:

- Arming the Alarm System:

- The alarm arms automatically every evening after prolonged inactivity in a given area, and disarms automatically when a mobile phone alarm goes off in the morning, as long as the phone's owner is detected at home through their GPS location.

- When the building is armed, the speakers announce which part of the house is being monitored using TTS, and a notification is sent to mobile devices to confirm the system is armed.

- While the system is armed:

- Live notifications showing the faces of people picked up by cameras around (not inside) the perimeter of the armed area are sent to mobile devices.

- If the door leading to the area is closed but not locked, a notification with a snapshot of the door is sent to mobile devices as a reminder to lock it.

- The sensors switch to a monitoring state; if motion, presence (including breathing), pressure, vibration or an opening is detected in the monitored area, the system activates.

- Upon system activation:

- Sirens and speakers around the house emit high-decibel tones. Snapshots from the cameras in the area are sent to mobile devices, along with notifications that the security system has been tripped, describing which sensor caused the activation.

- This guide does not cover automated calls to the police (although it can be done), as this depends heavily on local regulations and whether automatically calling the authorities is allowed in your area.

- The sirens continue to emit tones until the system is disarmed, at which point the sensors revert to their original state and CCTV snapshots of people are no longer sent. When the system is disarmed, the speakers confirm which area of the house is disarmed and a notification is sent to users.
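Taken together, the flow above behaves like a small state machine. The following Python sketch models it under simplifying assumptions: the event names (`arm_home`, `arm_away`, `sensor_tripped`, `disarm`) are hypothetical labels rather than Alarmo or Home Assistant APIs, and switching directly between the two armed modes is left out.

```python
from typing import Dict, List, Tuple

# Hypothetical events; Alarmo and Home Assistant expose their own services and states.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("disarmed", "arm_home"): "armed_home",
    ("disarmed", "arm_away"): "armed_away",
    ("armed_home", "sensor_tripped"): "triggered",
    ("armed_away", "sensor_tripped"): "triggered",
}

# Side effects described in the overview, keyed by the state being entered.
ON_ENTER: Dict[str, List[str]] = {
    "armed_home": ["announce armed area (TTS)", "notify phones"],
    "armed_away": ["announce armed area (TTS)", "notify phones"],
    "triggered": ["sirens on", "send camera snapshots", "notify phones"],
    "disarmed": ["sirens off", "announce disarmed area (TTS)", "notify phones"],
}


def next_state(state: str, event: str) -> str:
    """Return the state after `event`; unknown combinations leave it unchanged."""
    if event == "disarm":
        return "disarmed"  # disarming works from any state, including triggered
    return TRANSITIONS.get((state, event), state)


def run(events: List[str], state: str = "disarmed") -> str:
    """Feed events through the machine, printing the actions on each transition."""
    for event in events:
        new = next_state(state, event)
        if new != state:
            print(f"{state} -> {new}: {', '.join(ON_ENTER[new])}")
        state = new
    return state
```

For example, `run(["arm_away", "sensor_tripped", "disarm"])` walks through arming, activation and disarming, and returns `"disarmed"`. A sensor event while disarmed is simply ignored, mirroring sensors that are not in their monitoring state.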

Systems and Devices:

- Home Assistant: The heart of the setup, with automations to manage the system.

- Zigbee Sensors: Low-power and reliable IoT sensors.

- Motion Sensors to detect general motion events with infrared.

- Presence Sensors to detect human presence, including breathing and micromovements.

- Contact Sensors to detect window and door open/close states.

- Pressure Sensors to detect weight increase when sat on/stepped on.

- Vibration Sensors to detect shocks and vibrations when the surface of a door/window is disturbed.

- Sirens to emit high-decibel tones when the alarm is triggered.

- Smoke and Fire detectors can be leveraged for their speaker component to act as part of the siren system during activation.

- Speakers for general announcements of the status of the alarm system.

- Door locks (though I'm generally not a fan of smart locks, I found the Switchbot, a key-turn based smart lock, to work well with HA) for reporting the front door's lock status.

- Bluetooth proxies: ESP32 devices acting as a meshed Bluetooth-over-Wi-Fi system that connects non-Zigbee components to Home Assistant over long distances.

- Alarmo: An integration for Home Assistant to manage the alarm system using sensor states.

- MQTT: Used to receive events from the CCTV system and non-Zigbee sensors.

- Frigate NVR: Connects all CCTV cameras to an NVR that integrates with Home Assistant.

- DeepStack Facial Recognition with the Double-Take front end: Ensures only actual humans are detected, mitigating false positives from Frigate.

Base Requirements

This is an advanced guide requiring prior knowledge of Linux, Home Assistant, and Zigbee Mesh Networking:

- Home Assistant set up and running with ZHA or Zigbee2MQTT configured with your sensors.

- Frigate set up and configured (refer to my other post for details).

- Docker server up and running (can use the same VM Frigate runs on).

- In order to rely on this system, digital security must be taken seriously:

- Do not open any ports; use a VPN to access your servers.

- Segregate the network to separate IoT and AV from your management network. Ensure devices cannot communicate externally.

- Set up firewall rules and block unnecessary DNS requests.

- Keep packages and integrations up to date and regularly test your system.

Install DeepStack

Install DeepStack to process images of faces and use its AI model to determine whether a person is familiar or unknown. The setup is extremely straightforward.

```shell
docker run --restart always -e VISION-FACE=True -v localstorage:/datastore -p 80:5000 deepquestai/deepstack
```

The DeepStack license key should already be activated. Navigate to http://{Host IP}:80 to validate the installation, and save the DeepStack key shown in the terminal after deployment.
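To confirm the face endpoint answers before wiring up Double-Take, you can build a request by hand. This standard-library Python sketch targets DeepStack's `/v1/vision/face/recognize` endpoint; the host address and the `image.jpg` upload name are placeholders, and the `api_key` form field only matters if you started DeepStack with an API key.

```python
import urllib.request
import uuid
from typing import Optional


def build_recognize_request(host: str, image: bytes, port: int = 80,
                            api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a multipart POST to DeepStack's face recognition endpoint."""
    boundary = uuid.uuid4().hex
    parts = []
    if api_key:
        # Optional API key, sent as an ordinary form field named api_key.
        parts.append(
            (f"--{boundary}\r\n"
             'Content-Disposition: form-data; name="api_key"\r\n\r\n'
             f"{api_key}\r\n").encode()
        )
    # The image itself goes in a form field named "image".
    header = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="image"; filename="image.jpg"\r\n'
        "Content-Type: image/jpeg\r\n\r\n"
    ).encode()
    parts.append(header + image + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode())  # closing boundary
    return urllib.request.Request(
        f"http://{host}:{port}/v1/vision/face/recognize",
        data=b"".join(parts),
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )
```

Send it with `urllib.request.urlopen(req, timeout=15)` and decode the JSON body; each entry in `predictions` carries a `userid` (`unknown` until faces are trained) and a `confidence` between 0 and 1.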

Install Double-Take

Double-Take is an amazing front-end that connects the MQTT server, Frigate and DeepStack together within one UI.

It receives frames from Frigate's MQTT topics in which a person was detected and runs DeepStack's facial recognition on each frame to find a face. Photos of known people are used to train the model so it can recognise specific people's faces.

Deploy the stack:

```yaml
version: '3.7'

volumes:
  double-take:

services:
  double-take:
    container_name: double-take
    image: skrashevich/double-take
    restart: always
    volumes:
      - double-take:/.storage
    ports:
      - 3010:3000
```

Navigate to http://{Host IP}:3010, then open the Config tab and use the following config:

```yaml
mqtt:
  host: IP ADDRESS # Home Assistant's host
  user: MQTT-USERNAME # Username of the MQTT user
  password: MQTT-PASSWORD # Password of the MQTT user

detect:
  match:
    save: true # Save frames of familiar faces
    base64: false
    confidence: 60 # Minimum confidence needed to determine a match with a familiar face
    purge: 8 # How many hours the image file is kept
    min_area: 10000 # Minimum area in pixels to consider a match
  unknown:
    save: true # Save frames of unknown faces
    base64: false
    confidence: 40 # Minimum confidence needed to determine an unknown face
    purge: 168 # Keep photos of unknown faces for a week
    min_area: 0 # Minimum area in pixels to save an unknown face

frigate:
  url: http://IP ADDRESS:PORT # Frigate's IP address and port number
  cameras: # Frigate cameras to process images from
    - Front

detectors:
  deepstack:
    url: http://IP ADDRESS:80 # DeepStack's IP address and port number
    key: sha256:KEY # Key generated in the CLI when DeepStack was deployed
    timeout: 15 # Seconds before the request times out
    opencv_face_required: false # Require OpenCV to find a face before processing with the detector
    cameras: # Frigate cameras to process images from
      - Front
```

Train Faces

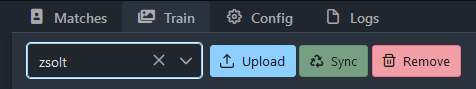

Training faces with Double-Take is super easy.

- Navigate to the Train tab, select the dropdown.

- Click Add New and enter a name for the person.

- Click Create.

- There are two options to train:

- Images sourced directly from the security cameras:

- Navigate to the Matches tab, select the dropdown, then select the person you created.

- Select the relevant CCTV frames, then click Train.

- Navigate to the Train tab to check the progress.

- Manually uploading photos of a person:

- Click Upload, select the images and upload them.

The more photos you provide, the better the accuracy; use images from all angles to ensure a good match.

Verify Sensors

After the training is complete, Double-Take will send processed data to Home Assistant through MQTT. The message will state the person's label (your name or unknown). Based on this label, automations can be created within Home Assistant later. To verify the sensors, navigate to Home Assistant:

- Developer Tools > States.

- Open Current Entities and search for double_take. You should see the trained face's label and the unknown person's entity (sensor.double_take_unknown).
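The name-or-unknown label can be pictured with a simplified model. The Python sketch below is a hypothetical illustration, not Double-Take's actual code: a face is reported by name only when the detector recognised it, its confidence reaches `detect.match.confidence` (60) and its bounding box covers at least `detect.match.min_area` (10000 pixels) from the config earlier in this guide; everything else surfaces as `unknown`. The `Face` type and the `zsolt` label are made up for the example.

```python
from dataclasses import dataclass
from typing import Tuple

# Thresholds copied from the Double-Take `detect.match` config above.
MATCH_CONFIDENCE = 60   # percent
MATCH_MIN_AREA = 10000  # pixels


@dataclass
class Face:
    name: str                        # detector label, e.g. "zsolt" or "unknown"
    confidence: float                # percent, 0-100
    box: Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels


def box_area(box: Tuple[int, int, int, int]) -> int:
    """Area of the face's bounding box in pixels."""
    x_min, y_min, x_max, y_max = box
    return (x_max - x_min) * (y_max - y_min)


def ha_label(face: Face) -> str:
    """Simplified model: report the name only for a confident, large-enough match."""
    if (face.name != "unknown"
            and face.confidence >= MATCH_CONFIDENCE
            and box_area(face.box) >= MATCH_MIN_AREA):
        return face.name
    return "unknown"
```

In this model, a face that is recognised correctly but far from the camera can still show up as unknown: a 50 by 50 pixel box is only 2,500 pixels.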

Install Alarmo

Alarmo is a fantastic integration that leverages your existing smart sensors. It has its own alarm control panel and allows configuring sensors for different types of alarm events, such as arm away, arm home, disarm (and more).

Install Alarmo through HACS, or install it manually.

It is highly recommended to check out the official documentation from Alarmo's page if this is your first time using an alarm system.

Configure Alarmo

This setup will focus only on Arm Away, Arm Home and Disarm states.

After installation, navigate to Alarmo on the side navigation menu of Home Assistant. The settings I use are:

General Tab:

- Disarm After Trigger: False

- Enable MQTT: False

- Enable Alarm Master: True (you only need to enable this if you are monitoring multiple buildings, where the Master alarm is able to control all of them at once)

- Armed Away:

- Status: Enabled

- Exit Delay: none (armed from outside the building)

- Entry Delay: none (disarmed through NFC or mobile phone)

- Trigger Time: 60 minutes (as the alarm expects me to disarm the system manually after an activation occurs)

- Select the sensors you want to use when the state is armed away.

- Armed Home:

- Same as Armed Away.

- Select the sensors you want to use when the state is armed home.

Difference between Armed Away and Armed Home:

The primary difference is the selection of sensors. Typically, in the Armed Home state, internal motion detectors are not activated, allowing for movement within the house without triggering the alarm. In contrast, Armed Away usually activates all sensors, including internal motion detectors, as the assumption is that no one should be inside the building when this mode is active.
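As a sketch of that difference, the Python below maps each mode to the set of sensors that can trip the alarm. The sensor names are hypothetical; in Alarmo the equivalent selection is made per mode in the UI.

```python
from typing import Dict, List, Set

# Hypothetical sensor names; replace with the entities you select in Alarmo.
PERIMETER: Set[str] = {"front_door_contact", "back_door_contact", "window_vibration"}
INTERIOR: Set[str] = {"hallway_motion", "living_room_presence", "sofa_pressure"}

ACTIVE_SENSORS: Dict[str, Set[str]] = {
    "armed_home": PERIMETER,             # people can move around inside
    "armed_away": PERIMETER | INTERIOR,  # nobody should be inside
    "disarmed": set(),                   # nothing trips the alarm
}


def tripped(mode: str, events: List[str]) -> List[str]:
    """Return, in order, the sensor events that would trigger the alarm in `mode`."""
    active = ACTIVE_SENSORS[mode]
    return [sensor for sensor in events if sensor in active]
```

With this mapping, `hallway_motion` is ignored in Armed Home but trips the alarm in Armed Away, while a door contact trips it in both.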

Actions Tab:

The Actions tab has basic automations for notifications and actions that can be performed when an alarm event occurs. Notifications are configured here, but all other actions are handled separately in Home Assistant's own automations instead of Alarmo's Actions menu, as HA gives more flexibility.

Notifications are used to send mobile notifications. I have set up notifications for every type of alarm event change for every key family member's device. At the time of writing, notifications must be configured per device, per event and per building, so I had to set up a lot of them to cover every scenario and endpoint.

This example creates a notification for when the system is armed home. Feel free to create more notifications to cover other event types.

Click New Notification:

- Event: Alarm is Armed

- Area: Relevant area, Alarmo by default.

- Mode: leave as is

- Task:

- Target: Select the target mobile.

- Title: Alarm System

- Message: The alarm is set to {{arm_mode}}.

- Name: Try to set a naming standard if you are planning on creating more than one notification. I follow this standard:

- Person - Building Name - Alarm Mode (e.g. Zsolt - House - Disarm)

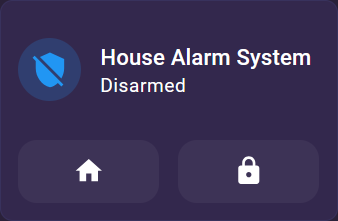
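Because notifications multiply per device, per event and per building, it can help to list the names up front using the naming standard above. A small Python sketch; apart from the Zsolt/House example, the people, buildings and modes are placeholders.

```python
from itertools import product
from typing import List

# Placeholder inventory; replace with your own people, buildings and alarm modes.
PEOPLE = ["Zsolt", "Anna"]
BUILDINGS = ["House", "Garage"]
MODES = ["Armed Away", "Armed Home", "Disarm", "Triggered"]


def notification_names(people: List[str], buildings: List[str],
                       modes: List[str]) -> List[str]:
    """One Alarmo notification per person's device, per building, per alarm mode."""
    return [f"{p} - {b} - {m}" for p, b, m in product(people, buildings, modes)]


names = notification_names(PEOPLE, BUILDINGS, MODES)
print(len(names))  # 16 (2 people x 2 buildings x 4 modes)
print(names[0])    # Zsolt - House - Armed Away
```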

Add the Control Panel Card to the Dashboard:

Alarmo ships with a default control panel that can be added to your dashboard right away (it can be customised, and Mushroom Cards also offer an alternative panel). To add it:

- Navigate to Overview > Add Card > Alarm Panel Card.

Set up Automations

The Alarmo alarm system is set up, but no actions are being performed yet. To perform various actions depending on the current state of the alarm, create automations under Settings > Automations and Scenes > Create Automation.

The following are simplified YAML automations that each focus on one instance per device type (e.g. one siren, one speaker, one mobile phone).

Arm Away Automation:

```yaml
alias: Alarm - Arming Away - House
description: ""
trigger:
  - platform: device
    device_id: {{ALARMO DEVICE}}
    domain: alarm_control_panel
    entity_id: {{ALARMO AREA}}
    type: armed_away
condition: []
action:
  - parallel:
      - service: media_player.play_media
        target:
          entity_id: media_player.living_room
        data:
          media_content_id: media-source://media_source/local/away.mp3
          media_content_type: audio/mpeg
        metadata:
          title: away.mp3
          thumbnail: null
          media_class: music
          children_media_class: null
          navigateIds:
            - {}
            - media_content_type: app
              media_content_id: media-source://media_source
  - if:
      - condition: device
        device_id: {{ALARMO DEVICE}}
        domain: alarm_control_panel
        entity_id: {{ALARMO AREA}}
        type: is_armed_away
    then:
      - parallel:
          - service: tts.google_translate_say
            metadata: {}
            data:
              cache: false
              entity_id: media_player.living_room
              message: The alarm system in the house is armed away.
mode: single
```

When the building is armed away, play a notification sound on the connected speakers and make an announcement using Text-to-Speech.
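To test the arming automations without leaving the house, you can call the same service through Home Assistant's REST API from a machine on your network. The Python sketch below only builds the request; it assumes a long-lived access token created from your Home Assistant profile, the `alarm_control_panel.house` entity used later in this guide, and a placeholder host on the default port 8123.

```python
import json
import urllib.request
from typing import Optional


def build_service_call(base_url: str, token: str, service: str,
                       entity_id: str, code: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a REST call such as alarm_control_panel.alarm_arm_away."""
    payload = {"entity_id": entity_id}
    if code is not None:
        payload["code"] = code  # only needed if the panel requires a code
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/services/alarm_control_panel/{service}",
        data=json.dumps(payload).encode(),
        headers={
            "Authorization": f"Bearer {token}",  # long-lived access token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen` sends it; swapping `alarm_arm_away` for `alarm_arm_home` or `alarm_disarm` exercises the other automations.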

Arm Home Automation:

```yaml
alias: Alarm - Arming Home - House
description: ""
trigger:
  - platform: device
    device_id: {{ALARMO DEVICE}}
    domain: alarm_control_panel
    entity_id: {{ALARMO AREA}}
    type: armed_home
condition: []
action:
  - parallel:
      - service: media_player.play_media
        target:
          entity_id: media_player.living_room
        data:
          media_content_id: media-source://media_source/local/home.mp3
          media_content_type: audio/mpeg
        metadata:
          title: home.mp3
          thumbnail: null
          media_class: music
          children_media_class: null
          navigateIds:
            - {}
            - media_content_type: app
              media_content_id: media-source://media_source
  - if:
      - condition: device
        device_id: {{ALARMO DEVICE}}
        domain: alarm_control_panel
        entity_id: {{ALARMO AREA}}
        type: is_armed_home
    then:
      - parallel:
          - service: tts.google_translate_say
            metadata: {}
            data:
              cache: false
              entity_id: media_player.living_room
              message: The alarm system in the house is armed home.
mode: single
```

When the building is armed home, play a notification sound on the connected speakers and make an announcement using Text-to-Speech.

Disarm Automation:

```yaml
alias: Alarm - Disarming - House
description: ""
trigger:
  - platform: device
    device_id: {{ALARMO DEVICE}}
    domain: alarm_control_panel
    entity_id: {{ALARMO AREA}}
    type: disarmed
condition: []
action:
  - service: siren.turn_off
    data: {}
    target:
      entity_id: siren.audible_alarm
  - parallel:
      - service: media_player.play_media
        target:
          entity_id: media_player.living_room
        data:
          media_content_id: media-source://media_source/local/disarm.mp3
          media_content_type: audio/mpeg
        metadata:
          title: disarm.mp3
          thumbnail: null
          media_class: music
          children_media_class: null
          navigateIds:
            - {}
            - media_content_type: app
              media_content_id: media-source://media_source
  - if:
      - condition: device
        device_id: {{ALARMO DEVICE}}
        domain: alarm_control_panel
        entity_id: {{ALARMO AREA}}
        type: is_disarmed
    then:
      - parallel:
          - service: tts.google_translate_say
            metadata: {}
            data:
              cache: false
              entity_id: media_player.living_room
              message: The alarm system in the house is disarmed.
mode: single
```

When the building is disarmed, stop the alarm siren, play a notification sound on the connected speakers and make an announcement using Text-to-Speech.

Triggered Automation:

```yaml
alias: Alarm - Triggering - House
description: ""
trigger:
  - platform: device
    device_id: {{ALARMO DEVICE}}
    domain: alarm_control_panel
    entity_id: {{ALARMO AREA}}
    type: triggered
condition: []
action:
  - parallel:
      - service: siren.turn_on
        data: {}
        target:
          entity_id: siren.audible_alarm
      - service: notify.mobile_app
        data:
          data:
            media_stream: alarm_stream_max
            tts_text: House Alarm System Triggered
            ttl: 0
            priority: high
          title: 🚨ALARM SYSTEM🚨
          message: TRIGGERED HOUSE ALARM
  - repeat:
      until:
        - condition: device
          device_id: {{ALARMO DEVICE}}
          domain: alarm_control_panel
          entity_id: {{ALARMO AREA}}
          type: is_disarmed
      sequence:
        - service: media_player.play_media
          target:
            entity_id: media_player.living_room
          data:
            media_content_id: >-
              media-source://media_source/local/triggered.mp3
            media_content_type: audio/mpeg
          metadata:
            title: triggered.mp3
            thumbnail: null
            media_class: music
            children_media_class: null
            navigateIds:
              - {}
              - media_content_type: app
                media_content_id: media-source://media_source
        - delay:
            hours: 0
            minutes: 0
            seconds: 8
            milliseconds: 0
          enabled: true
mode: single
```

When the alarm is triggered, notify mobile devices, turn on the siren, then play an alarm sound on the speaker every 8 seconds in a loop until the alarm system is disarmed.
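The loop relies on Home Assistant's `repeat` with `until`, which evaluates its condition after each pass through the sequence, so the sound always plays at least once. A Python simulation of that timing, assuming `play_media` returns immediately and ignoring clip length and service-call overhead:

```python
def count_plays(disarm_after: float, interval: float = 8.0) -> int:
    """Simulate `repeat: until` with a play_media step followed by an 8 s delay.

    `disarm_after` is the number of seconds between the trigger and disarming.
    """
    elapsed = 0.0
    plays = 0
    while True:
        plays += 1                   # sequence step 1: media_player.play_media
        elapsed += interval          # sequence step 2: delay
        if elapsed >= disarm_after:  # `until` is checked only after the sequence
            return plays
```

Disarming 20 seconds after activation therefore yields three plays (at 0, 8 and 16 seconds), and disarming immediately still yields one.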

Unknown Face Automation:

```yaml
alias: Alarm - CCTV Unknown Person
description: ""
trigger:
  - platform: state
    entity_id: sensor.double_take_unknown
condition: []
action:
  - if:
      - condition: or
        conditions:
          - condition: device
            device_id: {{ALARMO DEVICE}}
            domain: alarm_control_panel
            entity_id: {{ALARMO AREA}}
            type: is_armed_away
          - condition: device
            device_id: {{ALARMO DEVICE}}
            domain: alarm_control_panel
            entity_id: {{ALARMO AREA}}
            type: is_armed_home
          - condition: device
            device_id: {{ALARMO DEVICE}}
            domain: alarm_control_panel
            entity_id: {{ALARMO AREA}}
            type: is_triggered
    then:
      - service: notify.mobile_app
        data:
          message: >-
            Person by the {{trigger.to_state.state}} while alarm system is
            armed!
          title: CCTV
          data:
            image: >-
              http://{IP OF DOUBLE-TAKE SERVER}:3010/api/storage/matches/{{trigger.to_state.attributes.unknown.filename}}?box=true&token={{trigger.to_state.attributes.token}}
mode: parallel
```

When the alarm is in any state other than disarmed, send image notifications of unfamiliar faces recognised by DeepStack.
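If a notification arrives without an image, it helps to rebuild the URL by hand and open it in a browser. This Python sketch reproduces the URL pattern from the automation above; the host, filename and token values are placeholders standing in for the sensor's `unknown.filename` and `token` attributes.

```python
from urllib.parse import quote, urlencode


def match_image_url(host: str, filename: str, token: str,
                    port: int = 3010, box: bool = True) -> str:
    """Rebuild the Double-Take match image URL used in the notification."""
    query = urlencode({"box": str(box).lower(), "token": token})
    return f"http://{host}:{port}/api/storage/matches/{quote(filename)}?{query}"
```

For example, `match_image_url("192.168.1.20", "abc-123.jpg", "t0k3n")` gives `http://192.168.1.20:3010/api/storage/matches/abc-123.jpg?box=true&token=t0k3n`. The phone has to be able to reach the Double-Take server (for example over the VPN mentioned earlier) for the image to load.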

Door Lock Automation:

```yaml
alias: Alarm - Front Door - Alarm On Unlock Notification
description: ""
trigger:
  - platform: device
    device_id: {{DOOR LOCK}}
    domain: lock
    entity_id: {{DOOR LOCK ENTITY}}
    type: unlocked
    for:
      hours: 0
      minutes: 0
      seconds: 0
condition:
  - condition: or
    conditions:
      - condition: device
        device_id: {{ALARMO DEVICE}}
        domain: alarm_control_panel
        entity_id: {{ALARMO AREA}}
        type: is_armed_away
      - condition: device
        device_id: {{ALARMO DEVICE}}
        domain: alarm_control_panel
        entity_id: {{ALARMO AREA}}
        type: is_armed_home
action:
  - service: camera.snapshot
    data:
      filename: /media/front_door_alarm.jpg
    target:
      device_id: {{CAMERA}}
  - service: notify.mobile_app
    data:
      title: Front Door
      message: Door is unlocked.
      data:
        image: /media/local/front_door_alarm.jpg
mode: single
```

When the alarm system is set to arm home or arm away, if the front door is not locked with a key, take a photo and send a notification to serve as a reminder to lock the door.

Automatically Set Arm Home Automation:

```yaml
alias: Alarm - House Auto Arm Home
description: ""
trigger:
  - platform: time
    at: "22:00:00"
  - platform: time
    at: "23:00:00"
condition:
  - type: is_not_open
    condition: device
    device_id: {{DOOR}}
    entity_id: {{DOOR CLOSE ENTITY}}
    domain: binary_sensor
    for:
      hours: 0
      minutes: 30
      seconds: 0
  - condition: device
    device_id: {{ALARMO DEVICE}}
    domain: alarm_control_panel
    entity_id: {{ALARMO AREA}}
    type: is_disarmed
  - condition: zone
    entity_id: person.USER
    zone: zone.home
  - type: is_no_motion
    condition: device
    device_id: {{PRESENCE SENSOR}}
    entity_id: {{PRESENCE SENSOR PRESENCE ENTITY}}
    domain: binary_sensor
  - condition: state
    entity_id: sensor.phone_battery_state
    state: charging
  - type: is_not_occupied
    condition: device
    device_id: {{CAMERA}}
    entity_id: {{CAMERA PERSON OCCUPANCY}}
    domain: binary_sensor
    for:
      hours: 0
      minutes: 30
      seconds: 0
action:
  - service: alarm_control_panel.alarm_arm_home
    metadata: {}
    data: {}
    target:
      entity_id: alarm_control_panel.house
mode: single
```

At 10 PM (and, if that fails, at 11 PM), automatically arm home if all doors have been closed for 30 minutes, the alarm is currently disarmed, the user is home, no presence is detected, their phone is charging, and nobody has been occupying the camera's area for 30 minutes. Arming then triggers the Arm Home automation above.
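The arming decision is a plain AND of every condition at one of the two trigger times. A Python sketch with a hypothetical `Snapshot` type standing in for the entity states the automation reads:

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Snapshot:
    """Hypothetical snapshot of the entity states the automation checks."""
    now: time
    doors_closed_minutes: float   # how long the door contact has reported closed
    alarm_state: str              # e.g. "disarmed", "armed_home"
    user_home: bool               # person.USER in zone.home
    phone_charging: bool          # sensor.phone_battery_state == "charging"
    presence_detected: bool       # presence sensor reports someone
    camera_clear_minutes: float   # how long the camera has seen no person


TRIGGER_TIMES = (time(22, 0), time(23, 0))


def should_auto_arm_home(s: Snapshot) -> bool:
    """Mirror the automation: a trigger time fires and every condition holds."""
    return (
        s.now in TRIGGER_TIMES
        and s.doors_closed_minutes >= 30
        and s.alarm_state == "disarmed"
        and s.user_home
        and s.phone_charging
        and not s.presence_detected
        and s.camera_clear_minutes >= 30
    )
```

The `disarmed` condition is what makes the 11 PM retry safe: if the 10 PM attempt already armed the house, the second trigger does nothing.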

Automatically Disarm Automation:

```yaml
alias: Alarm - Disarm based on Mobile Alarm Clock
description: ""
trigger:
  - platform: time
    at: sensor.phone_next_alarm
condition:
  - condition: zone
    entity_id: person.USER
    zone: zone.home
  - condition: or
    conditions:
      - condition: device
        device_id: {{ALARMO DEVICE}}
        domain: alarm_control_panel
        entity_id: {{ALARMO AREA}}
        type: is_armed_away
      - condition: device
        device_id: {{ALARMO DEVICE}}
        domain: alarm_control_panel
        entity_id: {{ALARMO AREA}}
        type: is_armed_home
action:
  - device_id: {{ALARMO DEVICE}}
    domain: alarm_control_panel
    entity_id: {{ALARMO AREA}}
    type: disarm
mode: single
```

If the system is armed home or armed away, disarm it when the user's phone alarm goes off, as long as that person is home based on their geographical location.
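A Python sketch of the same decision, assuming the next-alarm sensor reports an ISO 8601 timestamp with a UTC offset and that the person entity reads `home` when at home. The real time trigger fires exactly at the alarm time; `now >= alarm_time` stands in for that here.

```python
from datetime import datetime, timezone


def should_auto_disarm(now: datetime, next_alarm_state: str,
                       user_zone: str, alarm_state: str) -> bool:
    """Phone alarm time reached, user at home, and the system currently armed."""
    try:
        alarm_time = datetime.fromisoformat(next_alarm_state)
    except ValueError:
        return False  # e.g. "unavailable" when no phone alarm is set
    return (
        now >= alarm_time
        and user_zone == "home"
        and alarm_state in ("armed_home", "armed_away")
    )


if __name__ == "__main__":
    # Example values only; the timestamp format is an assumption.
    now = datetime.now(timezone.utc)
    print(should_auto_disarm(now, "2024-05-01T06:30:00+00:00", "home", "armed_home"))
```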